This release includes model weights and starting code for pre-trained and fine-tuned Llama language models ranging from 7B to 70B parameters. This Jupyter notebook steps you through how to... We will use Python to write our... Llama 2 70B agent/tool use example: this Jupyter notebook provides examples of how to...

Llama 2 is a family of state-of-the-art open-access large language models released by Meta today, and we're excited to fully... This is a simple HTTP API for the Llama 2 LLM. It is compatible with the ChatGPT API, so you should be able to use it... We're unlocking the power of these large language models. Our latest version of Llama, Llama 2, is now accessible to... The goal of this repository is to provide a scalable library for fine-tuning Llama 2, along with some example scripts and notebooks. We're excited to announce that we'll soon be releasing open-source demo applications that utilize both LangChain and... Get up and running with Llama 2, Mistral, Gemma, and other large language models.
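Since the snippet above describes an HTTP API that is compatible with the ChatGPT API, a request to it could look roughly like the sketch below. The base URL and model name are hypothetical placeholders (the source gives neither), and the `/v1/chat/completions` path and `messages` payload are assumed to follow the usual ChatGPT-style chat completions convention rather than being confirmed for this particular server.

```python
import json
from urllib import request

# Hypothetical values: the source names neither a host, a port, nor a model id.
BASE_URL = "http://localhost:8000"
MODEL_NAME = "llama-2-7b-chat"


def build_chat_request(prompt, system=None):
    """Build (but do not send) a ChatGPT-style chat completion request."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    body = json.dumps({"model": MODEL_NAME, "messages": messages}).encode("utf-8")
    return request.Request(
        BASE_URL + "/v1/chat/completions",  # assumed ChatGPT-compatible path
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req):
    """Send the request and return the first reply's text (needs a running server)."""
    with request.urlopen(req) as resp:
        return json.load(resp)["choices"][0]["message"]["content"]
```

Calling `send(build_chat_request("Summarize Llama 2 in one sentence."))` would only work against a server that actually exposes this interface; building the request separately makes it easy to inspect the payload first.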

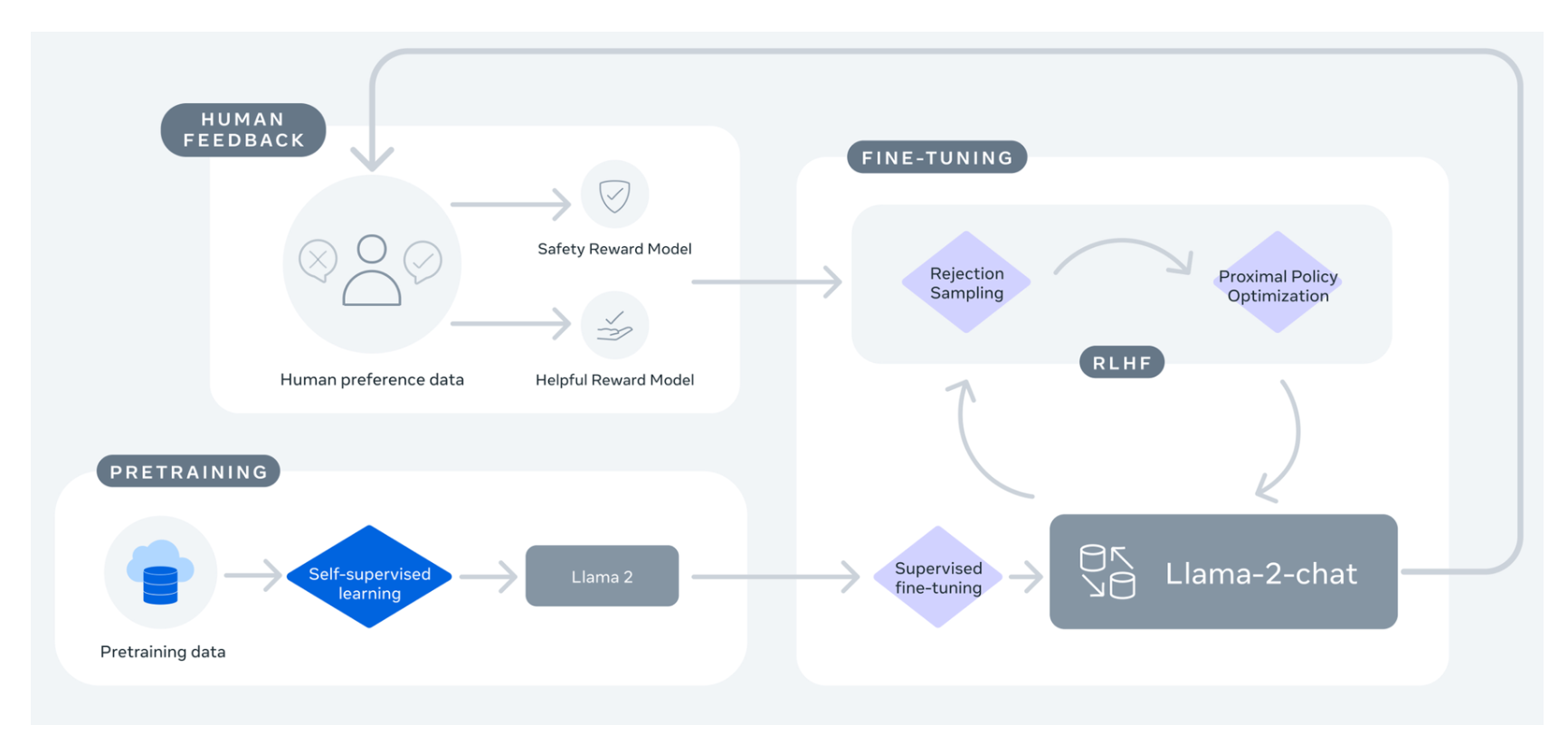

We present QLoRA, an efficient finetuning approach that reduces memory usage enough to finetune a 65B parameter model... Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. Introducing Llama 2 70B in MLPerf Inference v4.0: for the MLPerf Inference v4.0 round, the working group decided to...

What are the hardware SKU requirements for fine-tuning Llama pre-trained models? Fine-tuning requirements also vary based on the amount of data, the time to complete fine-tuning, and cost constraints. In this part, we will learn about all the steps required to fine-tune the Llama 2 model with 7 billion parameters on a T4 GPU. In this article, we will discuss some of the hardware requirements necessary to run LLaMA and Llama 2 locally. There are different methods for running LLaMA models on... Key concepts in LLM fine-tuning: Supervised Fine-Tuning (SFT), Reinforcement Learning from Human Feedback (RLHF), and prompt templates. Select the Llama 2 model appropriate for your application from the model catalog and deploy the model using the PayGo option. You can also fine-tune your model using MaaS from Azure AI Studio and...
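To make the hardware question more concrete, here is a rough back-of-the-envelope sketch of how much memory the model weights alone occupy at different precisions. It uses only the arithmetic "parameters × bits per parameter"; the function name is made up for illustration, and the estimate deliberately ignores activations, gradients, optimizer state, and the KV cache, all of which add to the real requirement.

```python
def weight_memory_gib(n_params, bits_per_param):
    """Approximate memory for the weights only, in GiB (illustrative helper)."""
    return n_params * bits_per_param / 8 / 2**30


# Llama 2 parameter counts, rounded to the nominal sizes.
SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

for name, n_params in SIZES.items():
    fp16 = weight_memory_gib(n_params, 16)  # 16-bit weights
    int4 = weight_memory_gib(n_params, 4)   # 4-bit quantized weights
    print(f"Llama 2 {name}: ~{fp16:.1f} GiB at 16-bit, ~{int4:.1f} GiB at 4-bit")
```

By this estimate the 7B model's 16-bit weights already take up most of a T4's 16 GB, which is one reason memory-saving approaches such as 4-bit quantization are commonly paired with fine-tuning on that class of GPU.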
